The latest GRIT Report makes one thing clear: our industry is facing a trust crisis. “Sample & Data Quality” has climbed into the top tier of unmet needs, reflecting what many researchers already know—too often, the data we rely on isn’t as reliable as it should be. The pressure to deliver insights faster and cheaper has pushed traditional methods to the edge, and the rise of AI-generated responses and participant fraud has only deepened the cracks. The result is a growing sense of uncertainty about what, and who, to trust.

At its core, this is not a technology problem; it’s a relationship problem. For years, the industry has treated participants as transactions rather than partners, prioritizing efficiency over authenticity in how we engage and build relationships with them. Compounding that, fraudulent respondents have infiltrated recruiting sources, making it harder than ever to know whether we’re hearing from genuine participants or opportunists gaming the system for incentives. The outcome is predictable: disengaged or inauthentic respondents, questionable data and an erosion of confidence in what research can truly deliver. When “close enough” becomes “good enough,” everyone loses: the researcher, the client and ultimately the consumer whose voice we claim to represent.

Rebuilding trust requires more than better fraud detection or tighter screeners. It demands a rethinking of how we find and connect with people—and that’s where technology can help us do it better. The future of quality lies in platforms that merge human connection with intelligent automation. Technology now allows us to move beyond one-off interactions and create always-on environments where participants feel seen, valued and accountable. Research communities are leading this evolution, providing spaces where engagement is sustained, feedback is contextual and quality is verified in real time—not left to chance.

Within these environments, researchers can cultivate genuine participation and relationships. They can profile and track individuals longitudinally, observe behavioral changes over time and validate findings across multiple touchpoints. The result isn’t just cleaner data—it’s a deeper understanding of the people and motivations behind it. In a world flooded with noise, that level of connection is what separates meaningful insights from surface-level findings.

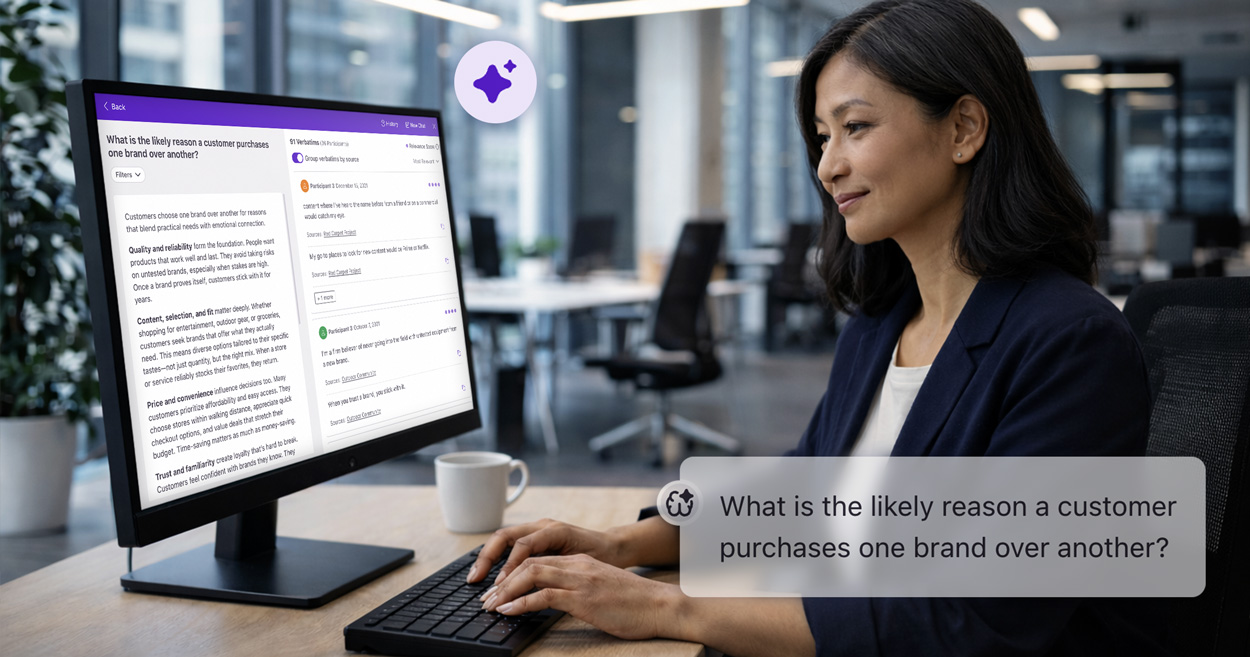

It’s no secret that AI plays a major role in this transformation. When embedded within the research process, it helps researchers identify patterns, detect fraud and validate authenticity faster and more accurately than ever before. It acts as an assistant, streamlining workflows, saving time and surfacing nuances that might otherwise be missed. Used responsibly, AI elevates human judgment instead of replacing it, helping researchers work at a scale that would be nearly impossible manually while maintaining the nuance and depth that define great qualitative work. The key is transparency: using AI as a collaborator that strengthens confidence in every response collected.

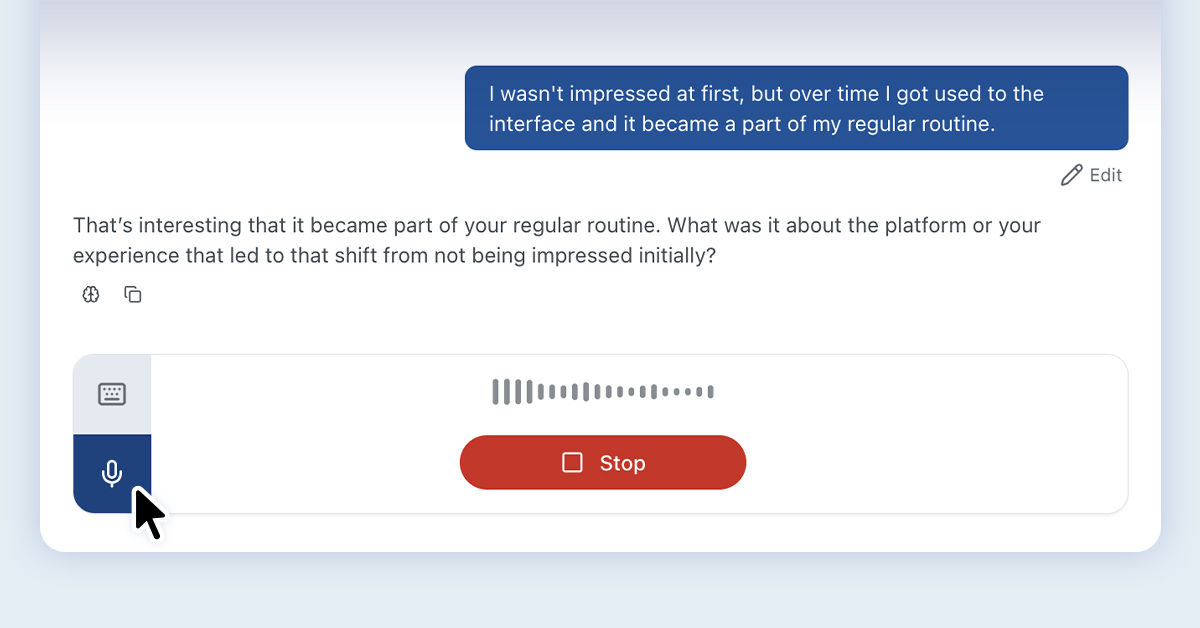

At Recollective, we’ve built safeguards that give researchers confidence in the quality of both their participants and their data. Our platform includes quality controls throughout the participant journey—from video-based screening and time-on-task tracking to fraud prevention tools that block copy-paste responses and flag inauthentic behavior. Researchers can highlight, rate and monitor participants over time, building a record of performance that reinforces trust and accountability. Our Conversational AI Task Type acts as a real-time quality safeguard, detecting off-topic or misaligned responses and automatically prompting course correction to maintain data integrity.

By using technology like Recollective that combines continuous engagement with intelligent automation, researchers can ensure they’re speaking to the right people, collecting authentic responses and delivering insights with confidence. Those trusted relationships restore both the integrity of our data and the credibility of our discipline.

The GRIT findings remind us that the top unmet needs (budget, time and quality) are all connected. Technology is not the enemy of trust; it’s the bridge helping to rebuild it. The future of insight isn’t “good enough”; it’s smarter, faster and more human than ever.
